- May 11, 2021 Technology

Amid US-China Competition over AI Capabilities, EU Projects Regulatory Power

Last month the European Commission (EC) released its far-reaching proposal for regulating artificial intelligence (AI), the “Artificial Intelligence Act.” Intended to create a unified set of rules across the European Union (EU), the Act marks one of the first serious attempts in the world at creating an overarching regulatory framework for AI.

The Act also complicates predictions of a bipolar AI world in which Washington and Beijing vie for dominance while the rest of the world looks on passively. Instead, the new EC draft regulations could steer us toward a tripolar AI landscape. While America and China are pushing the limits of AI in different ways, Europe is acting as the lead regulator to ensure that AI is deployed in safe and responsible ways.

Regulatory power is real power, and Brussels has already proven itself to be a highly influential rule-setter for technology. Recent European technology regulations, such as the General Data Protection Regulation (GDPR), have been imitated by other countries, and the AI Act will likely have similar ripple effects.

However, that rule-setting role comes with limitations. Despite nods to “supporting innovation,” Europe’s AI Act is not focused on its AI ecosystem’s competitiveness. While AI legislation in the United States and China focuses on building strong domestic capabilities, the EC regulation takes a different approach: set the regulatory bar high and require others to jump over it.

To understand the implications and limitations of Europe’s approach, we’ll first look at the Act itself, and then tease out its likely impact on AI development globally.

What is the Act Actually Regulating?

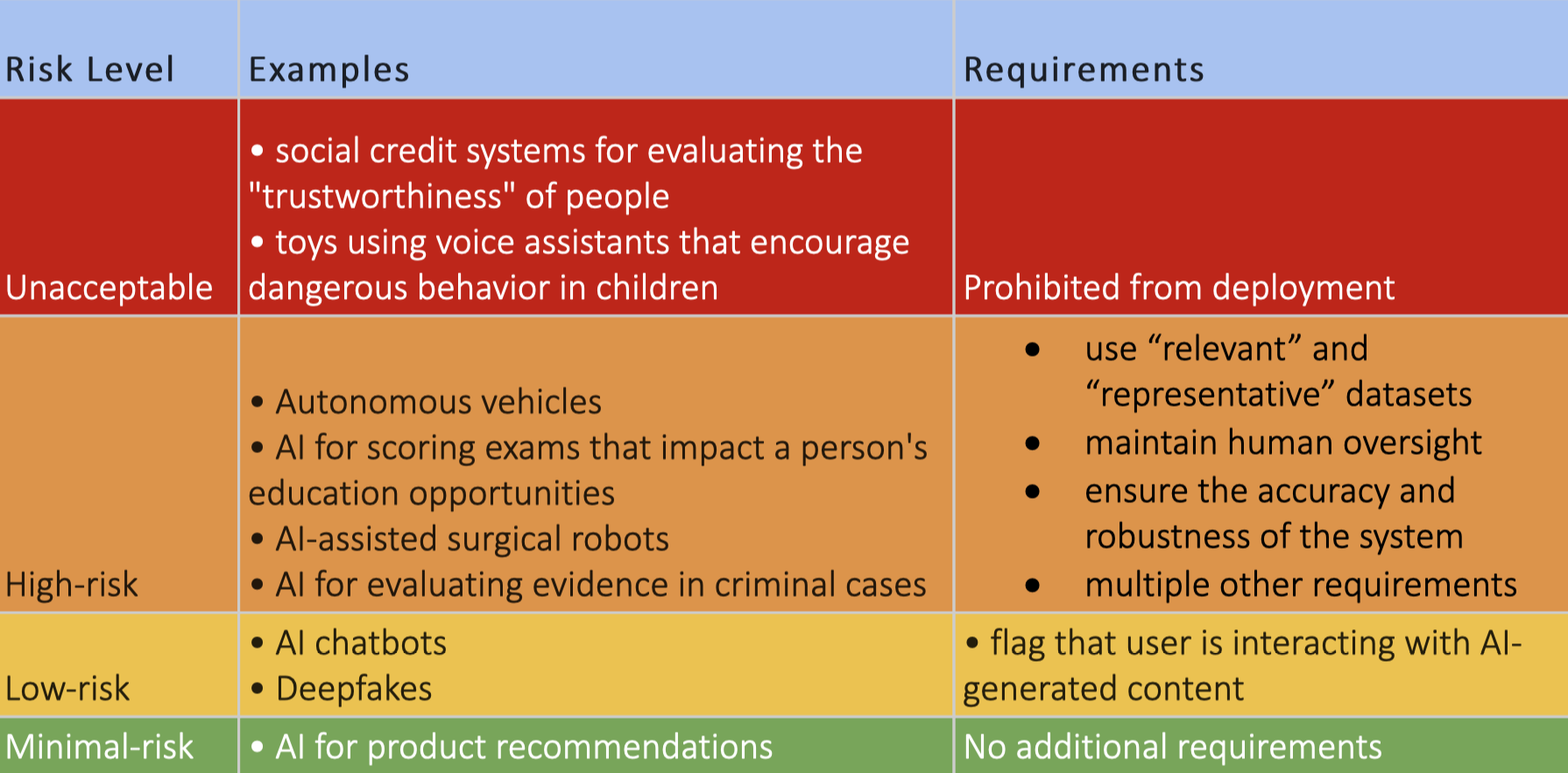

Categorizing and mitigating risk is the central organizing principle of the AI Act. It breaks down AI applications into four tiers of risk—unacceptable, high, low, and minimal—and then imposes different requirements on each tier (see Table 1).

Applications deemed to pose an unacceptable risk (e.g. social credit systems for determining the “trustworthiness” of people) are outright prohibited. Applications deemed low risk (e.g. AI chatbots) are subject to basic transparency requirements, while those considered minimal risk have no additional restrictions.

Table 1. Categories of Risk and Corresponding Requirements

Note: The dividing lines between these tiers, and the requirements in each tier, are likely to change as the EC draft is debated in the European Parliament.

Source: The AI Act.
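To make the tiered structure concrete, here is a minimal Python sketch of the four tiers and the requirement each one triggers. It paraphrases this article rather than the legal text, and the names `RiskTier`, `REQUIREMENTS`, `EXAMPLE_TIERS`, and `requirement_for` are hypothetical. The example applications are only the ones named in this piece, including the high-risk systems discussed further below.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LOW = "low"
    MINIMAL = "minimal"


# Requirement summaries paraphrase the article, not the Act's legal wording.
REQUIREMENTS = {
    RiskTier.UNACCEPTABLE: "prohibited outright",
    RiskTier.HIGH: "conformity assessment before being placed on the market",
    RiskTier.LOW: "basic transparency requirements",
    RiskTier.MINIMAL: "no additional restrictions",
}

# Hypothetical lookup built only from examples mentioned in the article.
EXAMPLE_TIERS = {
    "social credit scoring": RiskTier.UNACCEPTABLE,
    "facial recognition": RiskTier.HIGH,
    "critical infrastructure management": RiskTier.HIGH,
    "migrant screening": RiskTier.HIGH,
    "chatbot": RiskTier.LOW,
}


def requirement_for(application: str) -> str:
    """Return the tier and requirement recorded for a named example."""
    tier = EXAMPLE_TIERS.get(application)
    if tier is None:
        raise KeyError(f"no tier recorded for {application!r}")
    return f"{tier.value} risk: {REQUIREMENTS[tier]}"


print(requirement_for("chatbot"))
```

In practice the hard part is the classification itself, which the draft leaves to legal definitions that may still shift, so a static lookup like this is only a way to read the structure, not a compliance tool.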

Much of the novelty and potential impact of the regulation lies in its handling of “high risk” AI systems. This category includes AI systems for biometric surveillance (such as facial recognition), managing critical infrastructure, and screening migrants, among others. These types of systems are subject to strict “conformity assessments” carried out by organizations designated by EU member states. To pass those conformity assessments, high-risk AI systems must use “relevant” and “representative” datasets, maintain human oversight, and ensure the accuracy and robustness of the system, among other mandates.

For example, an AI system for detecting plagiarism in admissions essays would be considered “high risk” and submitted to a conformity assessment body that tests it on multiple requirements. Does the training data reflect the diversity of applicants? Does it disproportionately impact one subgroup? Does it accurately identify plagiarism, or could it be easily “tricked” by minor changes to the text? Only after being certified as conforming to these requirements could the system be placed on the market.
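One of those questions, whether the system disproportionately impacts a subgroup, can be illustrated with a short Python sketch that compares how often essays from each subgroup are flagged. This is an assumption about what such a test might look like, not a method prescribed by the Act or used by any assessment body. The function names and the sample records are invented for illustration.

```python
from collections import defaultdict


def flag_rates(records):
    """Return the share of essays flagged as plagiarized per subgroup.

    records: iterable of (subgroup, flagged) pairs, where flagged is a bool.
    """
    totals = defaultdict(int)
    flagged_counts = defaultdict(int)
    for subgroup, flagged in records:
        totals[subgroup] += 1
        flagged_counts[subgroup] += int(flagged)
    return {group: flagged_counts[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in flag rate between any two subgroups."""
    return max(rates.values()) - min(rates.values())


# Made-up records, for illustration only.
sample = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]
rates = flag_rates(sample)
gap = max_rate_gap(rates)
print(rates, gap)
```

A gap in flag rates does not by itself show unfair treatment, since subgroups may differ in actual plagiarism rates. What size of gap would fail an assessment is exactly the kind of detail the draft leaves open.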

Ripples beyond Europe

When Brussels makes technology policy, the rest of the world takes notes. Its landmark data protection regulation, GDPR, went into effect in 2018 and quickly became a touchstone for other countries. India’s 2019 Personal Data Protection Bill has substantial overlap with GDPR provisions around consent and erasure of user data. Chinese regulators, meanwhile, looked to GDPR as a model when crafting their own data law. Both countries made substantial changes in their own bills, but GDPR served as an anchor in policy development.

Even when other countries don’t follow Europe’s lead, companies often do. Both Facebook and Microsoft announced that they would voluntarily extend GDPR requirements to all their users worldwide. That voluntary extension may have been an attempt to preempt more restrictive policies, or an attempt to squeeze the most value out of a sunk cost: Microsoft claims it had 1,600 engineers working on GDPR compliance. The AI Act will likely spur similar activity, forcing companies to build out AI assessment tools and catalyzing advances in nascent fields such as AI interpretability.

But that same cost of compliance may disadvantage smaller European startups building AI-powered products. The extensive legal and engineering work needed to ensure compliance will likely be a burden—or even a deterrent—for small companies looking to deploy “high risk” AI systems in Europe.

Sweeping new regulations are often viewed as a way to rein in the power of big tech companies. But these regulations can end up becoming new moats around the existing tech empires by increasing the cost of entering the market. No company likes pouring thousands of employee hours into compliance work on a new regulation, but the tech giants—most of them American and Chinese—can more ably absorb that cost.

This doesn’t mean the European Union should scale back its ambitions for regulating AI. Pioneering a risk-based approach and catalyzing the assessment of AI systems will ultimately benefit Europeans and those around the globe. But if Brussels wants European institutions to lead in building those systems, it will need to set equally ambitious goals for fostering its own AI ecosystem.

Matt Sheehan is a Fellow at MacroPolo. You can find his work on tech policy, AI, and Silicon Valley here.
