November 19, 2024

Autonomous Driving: The Future is Getting Closer (Part I)

This piece is part of a series. Click to read Part 2 and Part 3.

Imagine this scene: a ragtag band of engineers, hobbyists, and tinkerers descends on southern California’s parched Mojave Desert with improvised driving machines. The challenge: get their homemade autonomous vehicles to complete a 150-mile track and win $1 million. None of them succeeded. The best-performing team, from Carnegie Mellon University (CMU), got its machine to drive a meager 7.4 miles.

That was two decades ago, in 2004, when the Pentagon’s Defense Advanced Research Projects Agency (DARPA) dangled a giant jackpot to incentivize progress in autonomous driving (AD). The state of the technology still leaves much to be desired today, but DARPA’s gambit wasn’t for naught—two members of that pioneering CMU team would go on to become founders of Alphabet’s Waymo, the current leader in AD (see Figure 1).

Figure 1. Homemade Entrants for DARPA’s Inaugural 2004 Grand Challenge

Note: Clockwise from top left: “Sandstorm” from CMU’s “Red Team”, SciAutonics-II AVIDOR-2004, TerraMax, Team CIMAR.

Source: R. Behringer (2004), The DARPA grand challenge – autonomous ground vehicles in the desert.

Nonetheless, there has been no “ChatGPT moment” for AD, which is still perceived as more about selling promise than demonstrating capability. But intensifying competition between two distinct approaches to advancing AD has brought renewed energy to this frontier technology.

Commercializing AD will be much more transformative than integrating artificial intelligence (AI) into chatbots and search. From a technological standpoint, the realization of AD would pave the way for a robotics revolution. From an economic standpoint, AD could dramatically enhance output and productivity, in large part by giving time back to people who would otherwise use it for driving.

We believe AD will be the next front of technology competition, especially between the United States and China—two economies endowed with the companies, talent, resources, and auto industries to achieve breakthroughs in the field.

In Part One of this series, we analyze the technology and approaches behind AD progress and why achieving full AD is so difficult. Part Two will assess how evolving AD technology could affect the hardware supply chain. Part Three will evaluate the geopolitical and competitive issues industry leaders face in and between the United States and China.

Why Autonomous Driving Is So Hard

Full AD, defined as a vehicle that can navigate all roads in any condition without human intervention, has been elusive because its fundamental task is extremely difficult. It requires recreating not only human eyes (through cameras and sensors) but also the myriad functions of a human brain (via a deep learning neural network) that make driving possible.

Just think about what it takes to drive a car. You have to process sensory data ranging from a truck’s imminent side swipe to kids and pets scurrying across the street or downpours that obscure visibility. Real-time reactions and adjustments require more instant hand-eye-feet coordination than most other human tasks.

Yet for humans, driving isn’t particularly onerous or difficult. Many of us start learning as teenagers and continue driving throughout our adult lives. Driving is so quotidian, even monotonous, that it is almost second nature for most people. That means full AD has to retain precision and adopt a human-like intuition.

To be commercially viable, full AD must attain a high degree of safety and social trust. For instance, can full AD handle treacherous airport drop-offs and pick-ups without fail? Or better yet, can it properly and safely navigate the bustling streets of Lahore?

As difficult as full AD may be to achieve, whoever masters it stands to not only transform the transportation sector but also revolutionize human lifestyles. More than 107 million Americans spend an average of 52 minutes driving for their daily commutes. Full AD could “return” over 24 billion hours to the American workforce per year.
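The 24 billion figure can be roughly reproduced from the two numbers above. The short Python sketch below is a back-of-the-envelope check, not the author's method: the count of 260 commuting days per year (five days a week, no holidays) is an assumption that does not appear in the text.

```python
# Back-of-the-envelope check of the commute figures quoted in the text.
COMMUTERS = 107_000_000        # from the text
MINUTES_PER_DAY = 52           # from the text
WORKDAYS_PER_YEAR = 260        # ASSUMPTION: 5 days x 52 weeks, no holidays


def annual_commute_hours(commuters: int, minutes_per_day: float, workdays: int) -> float:
    """Total hours spent driving to and from work across all commuters in a year."""
    return commuters * minutes_per_day / 60 * workdays


hours = annual_commute_hours(COMMUTERS, MINUTES_PER_DAY, WORKDAYS_PER_YEAR)
print(f"{hours / 1e9:.1f} billion hours per year")
```

With fewer commuting days (say 250), the total drops to about 23 billion hours, so the headline number is sensitive to that assumption.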

People can reallocate that time into leisure or productivity. You could spend more time with your kids in the backseat before school rather than scold them from the driver’s seat. Or you could be putting the finishing touches on that pitch deck you couldn’t get to the night before.

This is what would make AD’s socioeconomic impact so staggering. In passenger transport alone, AD could generate $300-$400 billion in revenue by 2035. But its cumulative social benefits are estimated to be in the trillions of dollars, with huge upsides for improving total factor productivity. It will also likely generate national savings—for instance, an AD penetration rate of 25% in the United States is estimated to save billions in healthcare costs by preventing 1,442,000 accidents and 12,000 fatalities (e.g. those caused by drunk driving).

Two Approaches, Two Competitors

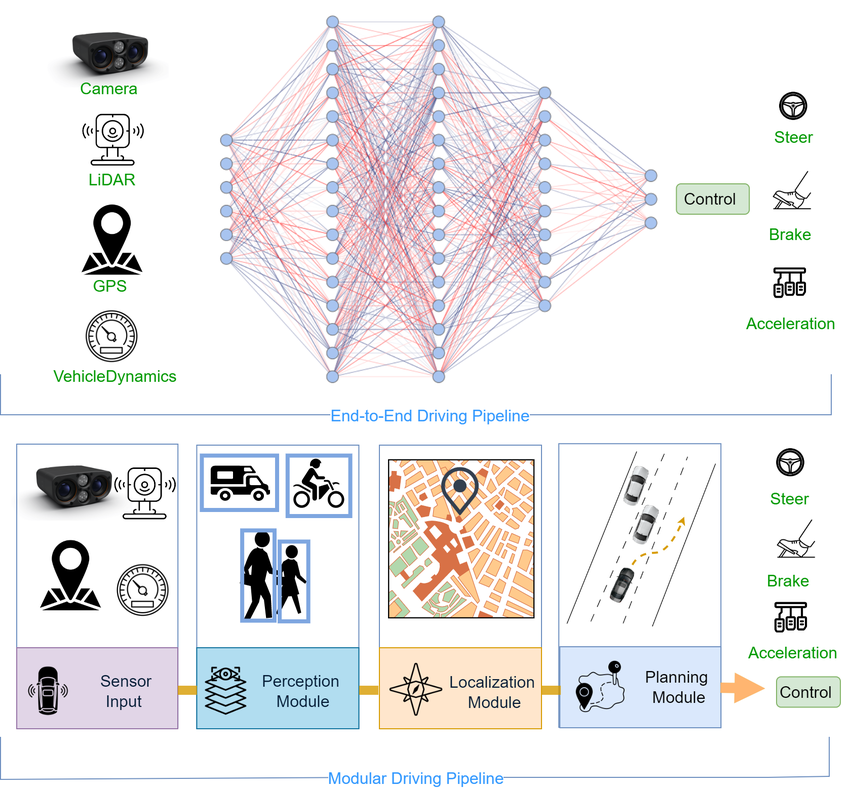

That future may sound far-fetched, but full AD is more plausible now than ever before. That’s thanks to two distinct approaches: modular architecture (Waymo) and end-to-end learning (Tesla)—both enabled by a technical breakthrough in the AI transformer model.

In modular architectures, separate transformers are deployed within different modules, each precisely trained for its specific task. For example, one transformer model can be dedicated to perceiving the external environment while another plans the safest path forward. A third transformer then controls the acceleration, brake, and steering.

The modular approach seeks to break down AD’s intrinsic complexity by confining each driving task into modules. Engineers can then refine and test the transformers in each module individually, making it easier to diagnose problems, adjust parameters, and ensure reliability. The irony, though, is that in trying to deal with AD’s complexity, the modular approach introduces additional process complexities.

By contrast, the end-to-end approach aims to simplify AD by using just a single transformer to power a unified neural network that translates raw sensor data into driving actions all at once. In essence, the end-to-end model is designed to more closely ape how a human naturally learns to drive and improves with real-world experience (see Figure 2).

Figure 2. End-to-End Unifies Data Processing in a Single Neural Network

Source: Chib, Pranav & Singh, Pravendra (2023). Recent Advancements in End-to-End Autonomous Driving using Deep Learning: A Survey.
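The structural difference between the two approaches can be sketched in a few lines of Python. This is a toy illustration only: every name here (`SensorFrame`, `Controls`, `perceive`, `plan`, `control`, `EndToEndDriver`) is hypothetical, the hand-written rules stand in for trained transformers, and nothing below reflects Waymo's or Tesla's actual systems.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SensorFrame:
    obstacle_distances_m: List[float]  # toy stand-in for camera/lidar/radar data
    speed_mps: float


@dataclass
class Controls:
    throttle: float  # 0..1
    brake: float     # 0..1
    steering: float  # -1 (left) .. 1 (right)


# --- Modular: three stages that can be tested and tuned separately ---
def perceive(frame: SensorFrame) -> float:
    """Perception: reduce raw data to the nearest obstacle distance."""
    return min(frame.obstacle_distances_m, default=float("inf"))


def plan(nearest_m: float, speed_mps: float) -> float:
    """Planning: pick a target speed that keeps a two-second gap."""
    return min(speed_mps + 1.0, nearest_m / 2.0)


def control(target_mps: float, speed_mps: float) -> Controls:
    """Control: turn the speed error into throttle or brake."""
    error = target_mps - speed_mps
    if error >= 0:
        return Controls(throttle=min(error / 5.0, 1.0), brake=0.0, steering=0.0)
    return Controls(throttle=0.0, brake=min(-error / 5.0, 1.0), steering=0.0)


def modular_drive(frame: SensorFrame) -> Controls:
    nearest = perceive(frame)               # inspectable intermediate result
    target = plan(nearest, frame.speed_mps)  # inspectable intermediate result
    return control(target, frame.speed_mps)


# --- End-to-end: one opaque function from raw data to controls ---
class EndToEndDriver:
    def __init__(self, model: Callable[[SensorFrame], Controls]):
        # In practice this would be a single trained network; here any callable.
        self.model = model

    def drive(self, frame: SensorFrame) -> Controls:
        return self.model(frame)  # no intermediate outputs to inspect


frame = SensorFrame(obstacle_distances_m=[10.0, 35.0], speed_mps=20.0)
print(modular_drive(frame))
```

In the modular version, a bad braking decision can be traced to `perceive`, `plan`, or `control`. In the end-to-end version there is only one place to look, which is both its simplicity and its opacity.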

The transformer model, which both approaches rely on, is the same foundational architecture behind large language models (LLMs) powering OpenAI’s ChatGPT.

It turns out transformers are also a powerful tool in computer vision, able to process complex, high-dimensional data and recognize patterns over space and time. A major upgrade from convolutional neural networks, transformers excel at analyzing vast streams of sensor data, such as camera images, lidar point clouds, and radar signals. These vision language models (VLMs) are indispensable for interpreting the constant real-world input sent into the vehicle’s “eyes.”

In other words, the emerging industry consensus appears to be that throwing an obscene amount of compute power and driving data behind VLMs will yield rapid performance gains.

But herein also lies the main difference between LLMs in generative AI and VLMs in AD: compute needs. The former require greater training workloads in data centers, but AD also relies on compute within the vehicle to react independently in real time. Referred to as “inference,” these on-board processing workloads demand enormous amounts of leading-edge compute from custom chips.

For instance, a system with eight 8-megapixel cameras (recording at 30 fps) arrayed on all sides of a vehicle would generate 20 terabytes of data for every hour of driving, enough to fill over four thousand DVDs. That means a fleet of just 40 robotaxis (Waymo’s fleet has hundreds of vehicles) would in one day generate the equivalent of all the data contained in the US Library of Congress.
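The hourly figure can be checked with simple arithmetic. The sketch below assumes uncompressed footage at 3 bytes per pixel (24-bit color) and a 4.7 GB single-layer DVD; neither assumption is stated in the text, but together they reproduce the quoted numbers.

```python
# Rough check of the camera data-rate figures quoted in the text.
CAMERAS = 8                     # from the text
PIXELS_PER_FRAME = 8_000_000    # 8 megapixels, from the text
FPS = 30                        # from the text
BYTES_PER_PIXEL = 3             # ASSUMPTION: uncompressed 24-bit color
DVD_BYTES = 4.7e9               # ASSUMPTION: single-layer DVD capacity


def bytes_per_hour(cameras: int, pixels: int, fps: int, bytes_per_pixel: int) -> int:
    """Raw bytes produced by all cameras over one hour of driving."""
    return cameras * pixels * fps * bytes_per_pixel * 3600


hourly = bytes_per_hour(CAMERAS, PIXELS_PER_FRAME, FPS, BYTES_PER_PIXEL)
print(f"{hourly / 1e12:.1f} TB per hour")    # 20.7 TB per hour
print(f"{hourly / DVD_BYTES:,.0f} DVDs")     # 4,412 DVDs
```

Real systems compress video and rarely store every frame, so actual storage needs are likely far lower. The raw rate is still what the on-board chips have to ingest.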

Plus, inference inside cars (with no cloud to lean on) must work flawlessly because its correct execution is a matter of life and death. Inference latency must also be kept to a minimum for AD to match or best human drivers’ reaction time, which averages between 0.38 and 0.6 seconds.
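To get a feel for what those reaction times mean on the road, the sketch below converts them into distance travelled. The 30 m/s highway speed (about 108 km/h) is an illustrative assumption, not a figure from the text. The per-frame budget simply follows from the 30 fps camera rate mentioned earlier.

```python
# How far a car travels before any reaction begins, and how long the
# on-board system has per camera frame if it is to keep up with the feed.
REACTION_WINDOW_S = (0.38, 0.6)  # human average range, from the text
SPEED_MPS = 30.0                 # ASSUMPTION: roughly 108 km/h highway speed
CAMERA_FPS = 30                  # frame rate from the camera example in the text


def distance_before_reaction(speed_mps: float, latency_s: float) -> float:
    """Metres covered while the driver (human or machine) has not yet reacted."""
    return speed_mps * latency_s


def frame_budget_ms(fps: int) -> float:
    """Milliseconds available to process one frame without falling behind."""
    return 1000.0 / fps


for latency in REACTION_WINDOW_S:
    metres = distance_before_reaction(SPEED_MPS, latency)
    print(f"{latency:.2f} s at {SPEED_MPS:.0f} m/s -> {metres:.1f} m travelled")
print(f"per-frame budget at {CAMERA_FPS} fps: {frame_budget_ms(CAMERA_FPS):.1f} ms")
```

Under these assumptions, every tenth of a second of added latency costs about three metres of road.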

Waymo’s Way or Jumping on the Tesla Train?

Perhaps it comes as no surprise that the top two AD competitors are vying to prove the feasibility of their respective approaches.

Waymo is optimizing its modular architecture, earning plaudits from regulators for transparency and trustworthiness. Tesla, meanwhile, is all-in on the end-to-end approach that its engineers believe will lead to superior and faster results, even if it means compromising on transparency.

Waymo’s system allows for detailed verification and error tracing when things go wrong. But because the architecture is based on modules, adjusting one module means all the others need to be recalibrated to ensure alignment and compatibility. This process can be more onerous, and over AD’s long development horizons may eventually slow the integration of the latest AI techniques.

End-to-end, however, relies on a single integrated neural network that enables faster iteration of AD advances. Trained on enough scenario-specific driving data, it could develop better responsiveness to rare or irregular incidents like yielding to an ambulance tearing through red lights.

Both models clearly have their merits—and have plenty of overlap. But the end-to-end model appears to hold more promise for advancements because of its inherent agility. Tesla also has the formidable scaling advantage of having millions of its own cars on which to deploy AD features and gather real-time data. That gives Tesla a considerable edge in making progress on AD, so long as constraints on compute and data can be overcome.

Take Tesla’s latest software update in March 2024. Based on end-to-end, its Full Self-Driving (FSD) 12.3 demonstrated a step change in capability compared with previous versions, further validating the ability of end-to-end to push toward the AD frontier.

Industry competitors, particularly Chinese players, took notice. In fact, companies like Li Auto, NIO, Xiaomi, and Huawei, among others, all rapidly reorganized their AD units and jumped on the end-to-end bandwagon. Even Waymo is hedging: earlier this month, it released new research exploring the integration of end-to-end.

Despite Waymo’s first-mover advantage and solid operating record, Tesla’s recent progress suggests that it may emerge as the bellwether of progress in AD. But even if full AD is achieved tomorrow, its scalability and commercialization will be determined by cost. Robotaxi fleets, one of the most obvious AD use cases, simply won’t work if each vehicle costs $100,000. That is why the AD technology stack, a complex integration of hardware components and software, will be crucial in striking a balance between performance and cost.

In Part Two, we explore how key companies in the AD technology stack are competing to bring self-driving cars to market, focusing on sensor hardware, advanced chips for inference, and supercomputing clusters for training VLMs.

AJ Cortese is a senior research associate at MacroPolo. You can find his work on industrial technology, semiconductors, the digital economy, and other topics here.

The author would like to thank Cedar Liu and Guanzheng Sun for excellent research assistance.
